Hierarchical Reasoning Model (HRM)

Reasoning, the process of devising and executing complex goal-oriented action sequences, remains a critical challenge in AI. Current large language models (LLMs) primarily employ Chain-of-Thought (CoT) techniques, which suffer from brittle task decomposition, extensive data requirements, and high latency.

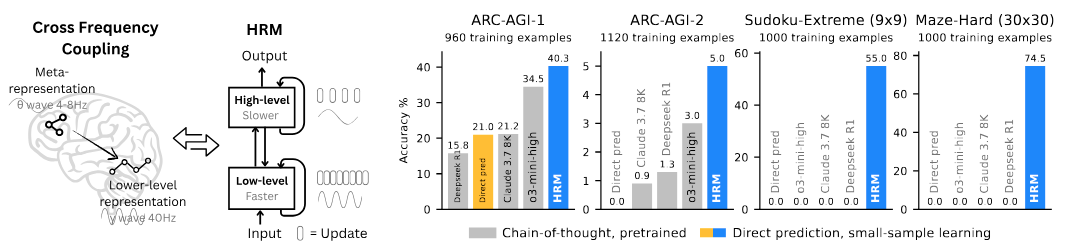

Inspired by the hierarchical and multi-timescale processing in the human brain, the Hierarchical Reasoning Model (HRM) is a novel recurrent architecture that attains significant computational depth while maintaining both training stability and efficiency. HRM executes sequential reasoning tasks in a single forward pass, without explicit supervision of the intermediate steps. This is achieved through two interdependent recurrent modules:

- A high-level module responsible for slow, abstract planning.

- A low-level module handling rapid, detailed computations.
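The interplay between a slow planner and a fast executor can be pictured as a nested recurrence. The code below is a minimal, self-contained sketch under stated assumptions, not the HRM implementation. The `TanhCell` class, the `hrm_forward` function, the parameters `n_cycles` and `t_steps`, the toy tanh update rule, and the schedule in which the low-level module performs `t_steps` updates for every single high-level update are all hypothetical illustrations.

```python
import math
import random

# Hypothetical toy sketch of a two-timescale recurrence. The tanh update
# rule, dimensions, and random weights are illustrative assumptions only.

def make_matrix(rows, cols, rng, scale=0.5):
    """Random weight matrix as a list of rows."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    """Matrix-vector product using plain Python lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def add(a, b):
    """Elementwise sum of two equal-length vectors."""
    return [x + y for x, y in zip(a, b)]

class TanhCell:
    """Toy recurrent cell: new_state = tanh(W_s @ state + W_c @ context)."""

    def __init__(self, state_dim, context_dim, rng):
        self.w_state = make_matrix(state_dim, state_dim, rng)
        self.w_context = make_matrix(state_dim, context_dim, rng)

    def __call__(self, state, context):
        pre = add(matvec(self.w_state, state), matvec(self.w_context, context))
        return [math.tanh(p) for p in pre]

def hrm_forward(x, low, high, dim, n_cycles, t_steps):
    """Run n_cycles slow high-level updates, each preceded by t_steps fast
    low-level updates, all inside one call (no intermediate outputs)."""
    z_low = [0.0] * dim
    z_high = [0.0] * dim
    low_updates = high_updates = 0
    for _ in range(n_cycles):
        for _ in range(t_steps):
            # Fast step: conditioned on the current high-level state and the input.
            z_low = low(z_low, z_high + x)  # list concatenation as context
            low_updates += 1
        # Slow step: the high-level state reads the settled low-level state.
        z_high = high(z_high, z_low)
        high_updates += 1
    return z_high, low_updates, high_updates

rng = random.Random(0)
dim, input_dim = 8, 4
low = TanhCell(dim, dim + input_dim, rng)
high = TanhCell(dim, dim, rng)
x = [rng.uniform(-1, 1) for _ in range(input_dim)]

z_high, n_low, n_high = hrm_forward(x, low, high, dim, n_cycles=3, t_steps=4)
print(n_low, n_high)  # 12 3
print(len(z_high))    # 8
```

In this toy version, the effective depth is `n_cycles * t_steps` low-level updates computed within one call and without emitting any intermediate tokens, which is one way to read "a single forward pass" with substantial computational depth.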

With only 27 million parameters, HRM achieves exceptional performance on complex reasoning tasks using as few as 1000 training samples. The model operates without pre-training or CoT data, yet achieves nearly perfect performance on challenging tasks, including complex Sudoku puzzles and optimal pathfinding in large mazes.

Furthermore, HRM outperforms much larger models with significantly longer context windows on the Abstraction and Reasoning Corpus (ARC), a key benchmark for measuring artificial general intelligence capabilities. These results underscore HRM’s potential as a transformative advancement toward universal computation and general-purpose reasoning systems.

Key Features

- Efficient Reasoning: Solves complex problems in a single forward pass.

- Hierarchical Architecture: Employs high-level and low-level recurrent modules for planning and execution.

- Sample Efficient: Achieves high performance with a small number of training examples (e.g., 1000).

- No Pre-training Required: Trains from scratch without the need for large, general-purpose datasets.

- State-of-the-Art Performance: Outperforms larger models on benchmarks like ARC and masters tasks like Sudoku and maze solving.

Citation

If you use this work, please cite the following paper:

@misc{wang2025hierarchicalreasoningmodel,
      title={Hierarchical Reasoning Model},
      author={Guan Wang and Jin Li and Yuhao Sun and Xing Chen and Changling Liu and Yue Wu and Meng Lu and Sen Song and Yasin Abbasi Yadkori},
      year={2025},
      eprint={2506.21734},
      archivePrefix={arXiv},
      primaryClass={cs.AI},
      url={https://arxiv.org/abs/2506.21734},
}